
Most of the video ecosystem agrees on one thing: Ultra HD, or 4K, will happen, but none of us agree yet on when and how. What is clear is that standards will play a key role in determining the timeframe. In previous cases, with DASH for example, an industry body sitting above competing standards has been the most effective way to speed things up. Two separate initiatives seem to be coalescing independently, which may be a good thing. CES 2015 was the place to be, and many UHD issues were addressed there. To get a clearer picture, I spoke to someone at the heart of it all. Here is my interview with Thierry Fautier, VP of Video Strategy at Harmonic Inc.

Q: First of all, Thierry, can you confirm that UHD was a prominent theme in Las Vegas this year?

A: Most certainly, Ultra HD was one of the most prominent topics at CES 2015. This was the first major show since some key announcements of Ultra HD services in late 2014:

- UltraFlix and Amazon, which offer OTT services on connected TVs,

- DirecTV, which announced a push VoD satellite service (through its STB, which stores the content and then streams it for decoding in the Samsung UHD TV),

- Comcast, which announced a VoD streaming service delivered directly through the Samsung TV, with content from NBC.

Q: But these services require UHD decoding on a Smart TV?

A: Yes, that is the first takeaway from CES: for now, TVs are the ones decoding UHD; STBs will start doing so from the second half of 2015.

Q: Beyond the few services just described, what signs did you see that UHD might really start becoming available to all from 2015?

A: Several. For example, Warner Bros announced that it will publish UHD titles using Dolby Vision. Netflix also announced that its Marco Polo series would be remastered in HDR (but without saying which technology). So on the content and services side, things are moving on HDR.

Q: Do you see HDR as one of the first challenges to solve for UHD to succeed?

A: I do. The plethora of HDR demonstrations by all the UHD TV manufacturers was impressive. I will not go into the details of the technologies used; it would take too much time, and they may change because of the HDR standardization effort. The only thing I would say is that there is an industry consensus that UHD TVs will be produced at around 1,000 nits (against 10,000 for the MovieLabs spec) [a nit is a unit of luminance, one candela per square metre; a typical skylight lets in about 100 million nits and a fluorescent light about 4,000 nits]. On the technology side, LG is the outsider with its OLED technology, shown at 77 inches, while the rest of the industry seems to be focusing on quantum dots (Samsung announced in 2014 that it was abandoning OLED).

This suggests that we will have HDR in 2015; the real question is which spec HDR will be based on. That explains the eagerness of the studios to standardize HDR.
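To put the 1,000-nit and 10,000-nit figures in context, here is a minimal sketch of the SMPTE ST 2084 "perceptual quantizer" (PQ) curve, an HDR transfer function defined up to 10,000 nits. Using PQ here is an assumption made for illustration; the interview does not say which curve each demo used, and the function names `pq_eotf` and `pq_inverse_eotf` are hypothetical. The constants are the published ST 2084 values.

```python
# Illustrative sketch of the SMPTE ST 2084 (PQ) transfer function.
# Assumption: PQ is used only to show how absolute luminance (nits) maps
# onto a normalized HDR signal; function names are hypothetical.
M1 = 2610 / 16384          # 0.1593017578125
M2 = 2523 / 4096 * 128     # 78.84375
C1 = 3424 / 4096           # 0.8359375
C2 = 2413 / 4096 * 32      # 18.8515625
C3 = 2392 / 4096 * 32      # 18.6875
PEAK_NITS = 10000.0        # PQ is defined up to 10,000 cd/m^2


def pq_eotf(signal: float) -> float:
    """Map a normalized PQ signal value in [0, 1] to luminance in nits."""
    e = max(0.0, min(1.0, signal)) ** (1 / M2)
    y = (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1 / M1)
    return PEAK_NITS * y


def pq_inverse_eotf(nits: float) -> float:
    """Map luminance in nits to a normalized PQ signal value in [0, 1]."""
    y = max(0.0, min(PEAK_NITS, nits)) / PEAK_NITS
    yp = y ** M1
    return ((C1 + C2 * yp) / (1 + C3 * yp)) ** M2


if __name__ == "__main__":
    # Where do typical display peaks fall on the PQ signal range?
    for level in (100, 1000, 4000, 10000):
        print(f"{level:>6} nits -> {pq_inverse_eotf(level):.1%} of the PQ signal range")
```

With this curve, 100 nits lands at roughly half of the signal range and 1,000 nits at about three-quarters, so a 1,000-nit TV uses most, but not all, of the range a 10,000-nit master can address.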

Q: So is HDR a complete mess?

A: HDR is actually already being standardized, although the various efforts are only loosely synchronized:

- ITU issued a call for technology, which was answered by Dolby, BBC, Philips and Technicolor.

- EBU/DVB is working on standardizing HDR, mainly for live broadcast applications. The goal is to finalize the spec in 2015.

- SMPTE is defining the parameters required for producing HDR content. The first specs (ST 2084 for the HDR EOTF and ST 2086 for metadata) have already been ratified.

- MPEG is currently defining what to add to the existing syntax to support HDR in a single layer. The outcome is expected in July 2015.

- Blu-ray is finalizing its HDR (single-layer) specification and hopes to freeze it by mid-2015, optimistically aiming to launch services in time for Christmas 2015. Blu-ray is working in coordination with MPEG and SMPTE. Note that Blu-ray will then follow up with specifications for streaming/download under UltraViolet.

- The Japanese stakeholders, through NHK, announced that they would develop their own HDR for 8K.

So you can see the diversity of the proposals that exist; the new “Ultra HD Alliance” should bring some order here. One clue I can give is that a UHD Blu-ray service launching in 2015 must rely on chips that are already in production in 2015. I think things will become clearer at NAB (April) and that by IFA (September) everything will be decided, at least for the short term, hopefully aligned with DVB/EBU Ultra HD-1 Phase 2.

Q: I gather that what is now called the “Ultra HD Alliance” is actually something different from what I described in my last blog post, and that its first challenge is getting HDR sorted out?

A: Indeed, Ben. The Ultra HD Alliance is a group of 10 companies, primarily from Hollywood and the TV world, plus Netflix and DirecTV on the operator side. The group's first goal is to get the HDR (High Dynamic Range) specifications under control (see diagram below), along with quality measurement at the output of the UHD TV. In this regard, Netflix will launch a certification of HDR streaming quality, starting with HD, and we can imagine that this will be extended to UHD. Note that no manufacturers have been invited yet, which is surprising, since they are the ones who will actually do most of the work!

Q: So the organization we spoke about last time is something else?

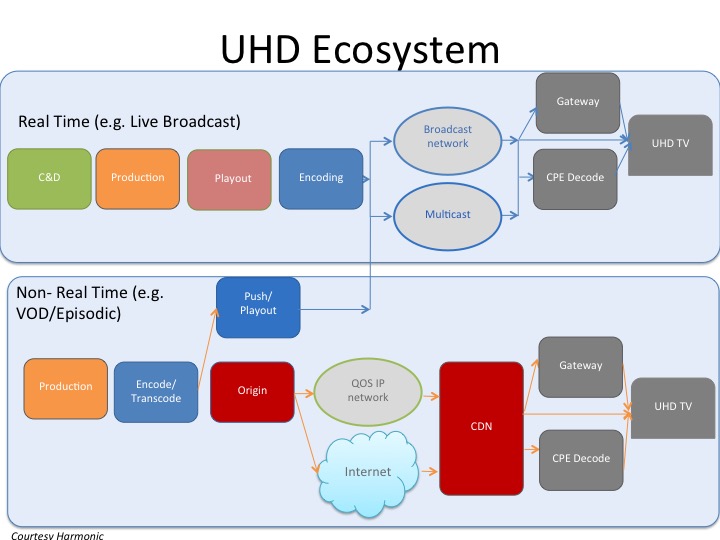

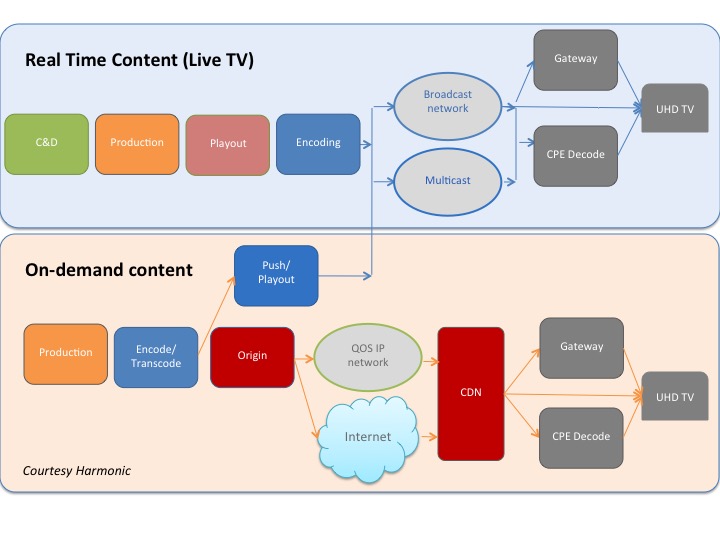

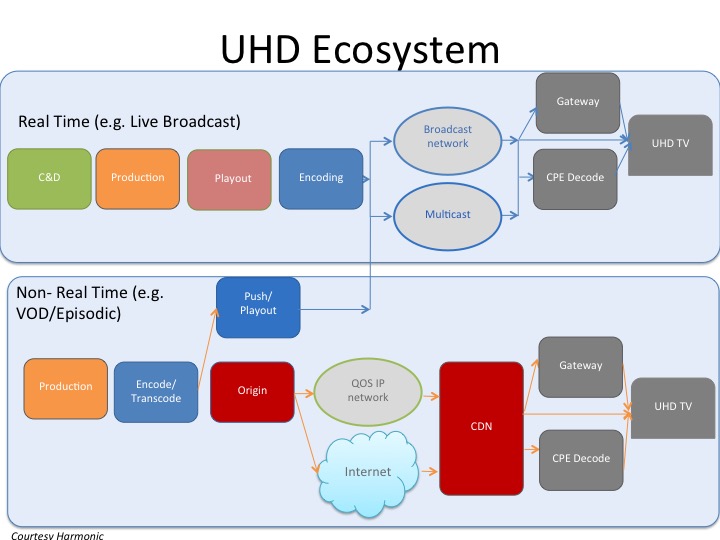

A: Yes. Harmonic, together with a group of 40 other companies, has proposed creating an Ultra HD Forum to take care of the complete UHD chain end to end, including OTT, QoS, push VoD, nVoD, adaptive streaming, live and on-demand. After various meetings at CES, discussions are ongoing to ensure that the two groups (UHD Alliance and UHD Forum) work closely together.

Q: So, as in other areas, do you see the need for at least two governing bodies to manage UHD standards?

A: In the short term, yes. The UHD Alliance is focusing on a single blocking factor at the moment, i.e. HDR/WCG/audio, but will have a broader marketing and evangelization remit. The UHD Forum, on the other hand, is starting out with an ambition of end-to-end ecosystem impact. In the longer term there is no reason the two entities could not merge, but from where we stand today it seems most efficient to have two bodies with different focuses.

Q: Does HDR make sense without HFR (High Frame Rate)?

A: Well, I'd say there is still a challenge on the chip side, as twice the computing power is required; HDMI is also a limiting factor as bandwidth increases. Early services might get away with just a 25% increase. Most encoder providers are not yet convinced that the effort will produce improvements that justify the disruption brought by doubling the frame rate. We have been asking for formal 60/120 fps testing, but we'll need to wait for new-generation cameras, especially in sport, as opposed to the cameras currently in use, which are often set to low shutter speeds inherited from the film world, where 24 fps is the norm. At IBC 2014, Harmonic, together with Sigma Designs, showed UHD p50 encoding with up-conversion to 100 fps in a Loewe Ultra HD TV set, using motion-compensated frame-rate up-conversion powered by Sigma Designs. Visitors from the EBU saw the demonstration and were pleased with the result. This will be one of the most contentious topics in the months to come, as the value may not outweigh the impact on the ecosystem.
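The "twice the computing power" point follows from the pixel rate: when the frame rate doubles, every stage that processes each pixel (decoding, up-conversion, transport over HDMI) has roughly twice as much work to do. A back-of-the-envelope sketch, assuming a 3840x2160 active picture with 10-bit 4:2:0 sampling and ignoring blanking and compression; the helper names are hypothetical and the figures are not a codec or HDMI budget.

```python
# Rough pixel-rate arithmetic for UHD at 50 vs 100 fps.
# Assumptions: 3840x2160 active picture, 10-bit 4:2:0, no blanking,
# no compression. Illustrative only.
WIDTH, HEIGHT = 3840, 2160
BITS_PER_SAMPLE = 10
SAMPLES_PER_PIXEL_420 = 1.5  # 1 luma sample + 2 chroma samples at quarter resolution


def pixel_rate(fps: int) -> int:
    """Active pixels per second at the given frame rate."""
    return WIDTH * HEIGHT * fps


def raw_bitrate_gbps(fps: int) -> float:
    """Uncompressed active-video bitrate in Gbit/s for 10-bit 4:2:0."""
    return pixel_rate(fps) * SAMPLES_PER_PIXEL_420 * BITS_PER_SAMPLE / 1e9


if __name__ == "__main__":
    for fps in (50, 100):
        print(f"UHD p{fps}: {pixel_rate(fps) / 1e6:.2f} Mpixel/s, "
              f"~{raw_bitrate_gbps(fps):.2f} Gbit/s uncompressed")
    print("p100 / p50 pixel-rate ratio:", pixel_rate(100) / pixel_rate(50))
```

Under these assumptions UHD p50 is about 415 Mpixel/s (roughly 6.2 Gbit/s uncompressed), and p100 is exactly double.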

Q: What about the chipset makers?

A: I visited Broadcom, ViXS, STM and Sigma Designs, who all had demos at different levels of maturity supporting different types of HDR. They are all waiting for an HDR standard.

Q: So to wrap up can you zoom out of the details and give us the overall picture for UHD deployment?

A: Ultra HD is a technology that will revolutionize the world of video. Making UHD happen requires a complete rethinking of the workflow, from video capture through production to presentation. This will take several years, and I'm not even talking about the spectrum issues of getting it onto DTT networks...

As you can see, the specifications for “real Ultra HD” are still in flux. The technologies are being put in place and should be ready in 2016, allowing large-scale live interoperability testing during the Rio Olympics and the first OTT and Blu-ray Disc services supporting HDR and WCG (Wide Color Gamut).

(Disclaimer 1: Thierry is a friend and is passionate about Ultra HD; he was an invited speaker on UHD at both NAB and IBC last year. Disclaimer 2: Although I have written for Harmonic in the past, I am not under any engagement with them.)

To be continued....